Earlier this month Dr Neil Geddes gave a fascinating presentation at the BCS on “Data Processing at the Large Hadron Collider”, describing how LHC experiments create 1 billion events per second, of which only 100 per second can be recorded in detail. Neil’s backgrounder on particle physics was certainly worthy of an award for the best Dummies’ Guide, covering everything from molecules and atoms to leptons, mesons, baryons and so on. The role of the LHC is to help discover new particles such as the Higgs boson, since current astronomical observations don’t fit current physics theory (for example, the rotational speeds of stars suggest some unknown gravitational force acting on galaxies).

The LHC collides protons to create new particles; the approximate rates of the experiment are:

- 40 MHz of “proton bunch” collisions

- which give 10^9 Hz proton collisions

- which give 10^-5 Hz particle production
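The chain of rates above can be sanity-checked with a little arithmetic. The sketch below takes the proton collision rate as 1 billion per second (as quoted in the introduction) and the particle production rate as 10^-5 Hz; the constant and function names are illustrative only.

```python
# Back-of-the-envelope arithmetic for the LHC rates quoted above.
# Assumed inputs: 40 MHz bunch crossings, 1e9 proton-proton collisions
# per second, and 1e-5 Hz production of a rare particle.
BUNCH_CROSSING_HZ = 40e6
COLLISION_HZ = 1e9
RARE_PARTICLE_HZ = 1e-5


def crossing_interval_ns(crossing_hz: float) -> float:
    """Time between successive bunch crossings, in nanoseconds."""
    return 1e9 / crossing_hz


def collisions_per_crossing(collision_hz: float, crossing_hz: float) -> float:
    """Average number of proton-proton collisions in a single bunch crossing."""
    return collision_hz / crossing_hz


def collisions_per_rare_particle(collision_hz: float, particle_hz: float) -> float:
    """Average number of collisions needed to produce one rare particle."""
    return collision_hz / particle_hz


if __name__ == "__main__":
    print(f"{crossing_interval_ns(BUNCH_CROSSING_HZ):.0f} ns between crossings")
    print(f"{collisions_per_crossing(COLLISION_HZ, BUNCH_CROSSING_HZ):.0f} collisions per crossing")
    print(f"{collisions_per_rare_particle(COLLISION_HZ, RARE_PARTICLE_HZ):.0e} collisions per rare particle")
```

With these inputs, bunches cross every 25 ns and each crossing holds about 25 collisions on average, while a particle produced at 10^-5 Hz turns up roughly once per 10^14 collisions.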

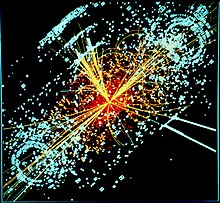

So one part of the LHC experiments is concerned with creating these particles, and the other part is “Complex Event Detection”: detecting and tracking the particles that fly out of the proton collisions, and then processing the data to try to reconstruct the “collision event” as it happened.

From an event detection perspective, as one would expect, the detector hardware is complex and layered to detect different types of particles:

- Silicon CCD strips (conceptually similar to a digital camera sensor) providing 66M channels (cf. camera pixels) detecting at 45 MHz; these measure the curvature of particle tracks in a magnetic field, from which particle momentum can be calculated

- Calorimeter: measures the energy of particles by their absorption (and subsequent heat generation)

- Lead tungstate crystals, which create measurable light when heavier particles are absorbed, arranged in layers of brass and crystals (with the brass apparently sourced from redundant Soviet artillery shells!)

- Drift chambers, which perform the same function as the layer-1 silicon strips but are much larger and hence have fewer readout channels
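As a sketch of how momentum follows from curvature: for a particle of unit charge in a uniform magnetic field, the standard relation p_T ≈ 0.3 · B · R (p_T in GeV/c, B in tesla, R in metres) gives the momentum component transverse to the field. The code below fits a circle through three hypothetical hit positions and applies that relation. The 3.8 T field in the example is an assumption (roughly that of the CMS solenoid), and real track fitting uses many hits and far more careful fits.

```python
import math

# For a unit-charge particle: p_T [GeV/c] ~= 0.2998 * B [T] * R [m].
GEV_PER_TESLA_METRE = 0.299792458


def circumradius(p1: tuple[float, float], p2: tuple[float, float], p3: tuple[float, float]) -> float:
    """Radius of the circle through three (x, y) hit positions, in the hits' units."""
    a = math.dist(p2, p3)
    b = math.dist(p1, p3)
    c = math.dist(p1, p2)
    # Cross product of (p2 - p1) and (p3 - p1) equals twice the triangle's signed area.
    cross = (p2[0] - p1[0]) * (p3[1] - p1[1]) - (p2[1] - p1[1]) * (p3[0] - p1[0])
    if cross == 0:
        return math.inf  # collinear hits: a straight, unbent track
    # R = abc / (4 * area), with area = |cross| / 2.
    return a * b * c / (2 * abs(cross))


def transverse_momentum_gev(b_tesla: float, radius_m: float) -> float:
    """Transverse momentum (GeV/c) of a unit-charge track with bending radius radius_m."""
    return GEV_PER_TESLA_METRE * b_tesla * radius_m


if __name__ == "__main__":
    # Three hypothetical hits (in metres) lying on a circle of radius 10 m.
    hits = [(0.0, 0.0), (10.0, 10.0), (20.0, 0.0)]
    r = circumradius(*hits)
    print(f"R = {r:.2f} m, p_T = {transverse_momentum_gev(3.8, r):.2f} GeV/c")
```

A tighter curve (smaller R) means a lower-momentum particle, which is why precise position measurements translate directly into momentum resolution.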

The instrumentation of these sensors also has to deal with detector latency: a single calorimeter reading, for example, could include the impacts of many events. The design of the sensors tries to mitigate this as much as possible; for example, the average occupancy of the silicon detectors is designed to be 2%.
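To see why a low occupancy helps, here is a toy model that assumes hits on each channel are independent and Poisson-distributed within one readout window (a simplification, not the real detector model). If 2% of channels are hit, the mean number of hits per channel is μ = -ln(0.98), and the chance that a hit channel actually carries two or more overlapping hits follows directly. The function names are hypothetical.

```python
import math


def mean_hits_for_occupancy(occupancy: float) -> float:
    """Poisson mean mu such that P(channel has >= 1 hit) equals the occupancy."""
    # occupancy = 1 - exp(-mu)  =>  mu = -ln(1 - occupancy)
    return -math.log1p(-occupancy)


def overlap_fraction(occupancy: float) -> float:
    """Fraction of hit channels that contain two or more hits (assumed Poisson)."""
    mu = mean_hits_for_occupancy(occupancy)
    p_zero = math.exp(-mu)
    p_one = mu * p_zero
    p_two_or_more = 1.0 - p_zero - p_one
    return p_two_or_more / occupancy


if __name__ == "__main__":
    print(f"{overlap_fraction(0.02):.2%} of hit channels overlap at 2% occupancy")
```

Under these assumptions only about 1% of hit channels are ambiguous at 2% occupancy; the fraction grows roughly in proportion to the occupancy, which is one reason to keep it low.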

In terms of event rates, the numbers are staggering: 1 billion proton-proton interactions per second means 1000 particle tracks created every 25 ns, which leads to 100Pb of event data per second that needs to be reduced to 100Mb per second. So the approach in event processing is to make successively more complex decisions on successively lower data rates. These reductions are:

- 40 MHz reduced to 100 kHz by custom electronics

- 100 kHz reduced to 1 kHz by initial processing

- 1 kHz reduced to O(100 Hz), or 3–10 ms per event
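The principle of making successively more complex decisions on successively lower rates can be sketched in two parts: the per-stage rejection factors implied by the rates listed above, and a lazy chain of selection functions in which each later, more expensive test runs only on events that survived the cheaper ones. The stage labels, the toy event format and the energy thresholds are hypothetical; real trigger decisions are far richer.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator

Event = dict  # toy event representation (hypothetical)


@dataclass(frozen=True)
class Stage:
    name: str
    input_hz: float
    output_hz: float

    @property
    def rejection(self) -> float:
        """Factor by which this stage reduces the event rate."""
        return self.input_hz / self.output_hz


# Rates from the list above; the stage names are descriptive labels only.
CASCADE = [
    Stage("custom electronics", 40e6, 100e3),
    Stage("initial processing", 100e3, 1e3),
    Stage("final selection", 1e3, 100.0),
]


def overall_rejection(stages: list[Stage]) -> float:
    """Product of the per-stage rejection factors."""
    factor = 1.0
    for stage in stages:
        factor *= stage.rejection
    return factor


def run_cascade(events: Iterable[Event], selectors: list[Callable[[Event], bool]]) -> Iterator[Event]:
    """Chain selectors lazily: each later (costlier) test only sees events the earlier ones kept."""
    stream: Iterable[Event] = events
    for keep in selectors:
        stream = filter(keep, stream)
    return iter(stream)


if __name__ == "__main__":
    for stage in CASCADE:
        print(f"{stage.name}: keeps 1 in {stage.rejection:.0f}")
    print(f"overall: keeps 1 in {overall_rejection(CASCADE):.0f}")

    # Toy events with a single hypothetical "energy" field; thresholds are made up.
    toy = [{"energy": e} for e in (1.0, 5.0, 12.0, 30.0)]
    kept = run_cascade(toy, [lambda ev: ev["energy"] > 2.0, lambda ev: ev["energy"] > 10.0])
    print([ev["energy"] for ev in kept])
```

The three stages keep 1 event in 400, 1 in 100 and 1 in 10 respectively, so overall only 1 bunch crossing in 400,000 survives. Ordering cheap tests first means the costly ones run on a small fraction of the input.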

For one of the LHC experiments (CMS; the others are ATLAS, ALICE and LHCb), event processing is done via a PC grid (up to 150K CPUs) using the approach of (a) identifying high-energy particles and (b) routing each event’s data over the network to a specific process. They use a huge disk buffer at the experiment in case of network failure, but otherwise distribute processing across a cross-Europe WAN (such as the CERN-UK connection, which handles 10 Gb per second).
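A toy version of the routing-with-buffer idea might look like the sketch below. It assumes, purely for illustration, that events are assigned to workers by event number modulo the number of workers, with in-memory queues standing in both for remote processes and for the disk buffer; `EventRouter` and its methods are hypothetical names, not part of any CMS software.

```python
from collections import deque


class EventRouter:
    """Toy router: one destination worker per event, with local spooling during outages."""

    def __init__(self, n_workers: int) -> None:
        # In-memory queues stand in for remote worker processes.
        self.queues: list[deque] = [deque() for _ in range(n_workers)]
        # Stands in for the large disk buffer kept at the experiment.
        self.buffer: deque = deque()
        self.network_up = True

    def worker_for(self, event_id: int) -> int:
        """Assumed assignment rule: event number modulo the number of workers."""
        return event_id % len(self.queues)

    def route(self, event_id: int, payload: bytes) -> None:
        """Send the event to its worker, or spool it locally if the network is down."""
        if self.network_up:
            self.queues[self.worker_for(event_id)].append((event_id, payload))
        else:
            self.buffer.append((event_id, payload))

    def drain_buffer(self) -> int:
        """Forward spooled events in arrival order once the network is back; return the count sent."""
        sent = 0
        while self.network_up and self.buffer:
            event_id, payload = self.buffer.popleft()
            self.queues[self.worker_for(event_id)].append((event_id, payload))
            sent += 1
        return sent


if __name__ == "__main__":
    router = EventRouter(n_workers=4)
    router.route(5, b"event-5")  # goes straight to worker 1
    router.network_up = False
    router.route(6, b"event-6")  # spooled during the outage
    router.network_up = True
    print(router.drain_buffer(), [len(q) for q in router.queues])
```

Modulo assignment keeps the routing rule stateless and spreads consecutive events evenly across workers; a real system would also need to rebalance when workers fail, which this sketch ignores.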

Interestingly they have found that:

- the network has proved much better than expected, so the nodes are increasingly being used as data caches

- CPU use has more than doubled in the last ~18 months

Also interesting is how much more hype there is for “big data” (MapReduce, Hadoop, et al.) than for developments in “extreme event processing” like that done at the LHC.

Note: the presenter used the term “data processing”, whereas I deliberately used the term “event processing”. Actually both are involved: event detection and initial processing, then distribution/storage and batch-style data processing.