According to a 2017 IDC study, cloud computing architecture is expanding seven times faster than non-cloud computing, and overall cloud services are expected to grow 24% to $122 billion this year. Despite this enormous growth, cloud computing architecture still has a few limitations, however boundless its use cases may seem.

The primary concern with relying on public cloud infrastructure is increased latency compared to on-premises performance. Using the public cloud to analyze data and then signal a local device to act can take much longer than desired. Even in the most efficient network, data cannot move fast enough for some workloads: protocol overhead, network congestion, and physical limits on transmission speed all add latency.

A great example of a system that would benefit from very low latency is a healthcare IoT system. In this scenario, data from sensors connected to mission-critical systems needs to be analyzed in near real time. There’s no time to wait for data to be transmitted to a data center many thousands of miles away, processed, and then sent back.
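
To put the physical limit in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes signals travel through optical fiber at about two-thirds the speed of light in a vacuum and ignores routing, queuing, and processing time, so real round trips will be slower. The function name and the sample distances are illustrative only.

```python
# Lower bound on round-trip time imposed by distance alone.
# Assumption: signal speed in fiber is roughly 2/3 of the speed of light in a vacuum.
SPEED_OF_LIGHT_KM_S = 299_792.458
FIBER_VELOCITY_FACTOR = 2 / 3
KM_PER_MILE = 1.609344


def min_round_trip_ms(distance_miles: float) -> float:
    """Return the best-case round-trip propagation delay in milliseconds."""
    distance_km = distance_miles * KM_PER_MILE
    one_way_seconds = distance_km / (SPEED_OF_LIGHT_KM_S * FIBER_VELOCITY_FACTOR)
    return 2 * one_way_seconds * 1000


if __name__ == "__main__":
    # Illustrative distances: a nearby edge device vs. faraway data centers.
    for miles in (10, 1000, 5000):
        print(f"{miles:>5} miles: at least {min_round_trip_ms(miles):.2f} ms round trip")
```

Under these assumptions, a data center 1,000 miles away costs roughly 16 ms per round trip before any processing happens, while a device a few miles away costs a small fraction of a millisecond.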

The answer to latency in the public cloud

To address latency in public cloud computations, an edge computing device is deployed closer to the sensors. This is not just a simple data collection device: an edge device has additional resources to run local compute, storage, and network services.

The edge device collects data from surrounding IoT sensors and either processes it on the spot and sends instructions for action, or sends the data back to a centralized location for heavier processing. As the IoT network grows, so will the amount of edge computing. The two go hand in hand.
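
The process-locally-or-forward decision described above can be sketched in a few lines of Python. This is a minimal illustration, not a real product API: the `Reading` and `EdgeDevice` classes, the threshold rule, and the `send_to_cloud` callback are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Reading:
    sensor_id: str
    value: float


class EdgeDevice:
    """Hypothetical edge device: acts locally on urgent readings, forwards the rest."""

    def __init__(self, alert_threshold: float, send_to_cloud: Callable[[Reading], None]):
        self.alert_threshold = alert_threshold  # assumed rule for "urgent"
        self.send_to_cloud = send_to_cloud      # stand-in for an uplink to a data center

    def handle(self, reading: Reading) -> str:
        if reading.value >= self.alert_threshold:
            # Urgent: decide right here, with no cloud round trip.
            return f"ACT: alert raised for {reading.sensor_id}"
        # Not urgent: hand off to the centralized location for heavier processing.
        self.send_to_cloud(reading)
        return "FORWARDED"


if __name__ == "__main__":
    uplink_queue = []  # simulates data sent back to the cloud
    device = EdgeDevice(alert_threshold=180.0, send_to_cloud=uplink_queue.append)
    print(device.handle(Reading("heart-rate-1", 190.0)))
    print(device.handle(Reading("heart-rate-1", 72.0)))
    print(f"{len(uplink_queue)} reading(s) queued for the cloud")
```

In a real deployment the local rule would likely be more than a single threshold, but the shape stays the same: time-critical decisions stay at the edge, and everything else travels upstream.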

The next generation of applications will rely on the edge to deliver enhanced user experiences

What’s really interesting is that, even though the edge computing layer was originally designed to address IoT scenarios, it is evolving to tackle emerging use cases such as augmented reality, virtual reality, and machine learning (ML) inference.

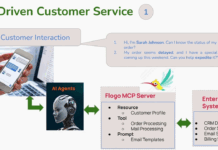

Innovative tech like AI can be greatly enhanced by edge computing, because AI-driven applications need to make decisions fast and act on them faster. The heavy lifting involved in training complex machine learning models still takes place in the public cloud, but the optimized model is then deployed at the edge for inferencing. The next generation of applications will rely on the edge to deliver enhanced user experiences.

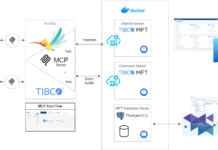
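
The split between cloud training and edge inferencing can be illustrated with a small Python sketch. Everything here is an assumption made for illustration: the JSON export format, the `EdgeModel` class, and the weight values are hypothetical, and a simple logistic model stands in for whatever optimized model a real cloud training job would produce.

```python
import json
import math
from typing import Sequence

# Stand-in for the artifact a cloud training job would export (hypothetical values).
EXPORTED_MODEL = json.dumps({"weights": [0.8, -0.5], "bias": -0.2})


class EdgeModel:
    """Loads pre-trained parameters and runs inference locally; no training happens here."""

    def __init__(self, serialized: str):
        params = json.loads(serialized)
        self.weights = params["weights"]
        self.bias = params["bias"]

    def predict_proba(self, features: Sequence[float]) -> float:
        # Logistic regression forward pass: sigmoid(w . x + b).
        z = sum(w * x for w, x in zip(self.weights, features)) + self.bias
        return 1.0 / (1.0 + math.exp(-z))


if __name__ == "__main__":
    model = EdgeModel(EXPORTED_MODEL)  # deployed once, reused for every reading
    score = model.predict_proba([1.0, 0.5])
    print(f"local inference score: {score:.3f}")
```

The edge device only needs the exported parameters and a cheap forward pass, which is why inference can run close to the sensors while the expensive training stays in the cloud.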

To do all of this, you need applications like TIBCO Flogo and TIBCO Cloud Integration. These solutions can bridge the gap between existing line-of-business apps, devices connected to the edge computing layer, and the public cloud, tightly integrating applications running on-premises with contemporary microservices deployed in the public cloud.

To learn more about how TIBCO can help you take advantage of this new breed of applications, read “Bringing Intelligence to Edge Computing for Machine Learning.”