As consumers, we have grown to expect that information will be pushed to us in a timely, relevant, and contextual fashion. We learn about key world events (and some that are not so key!) from information streams such as Twitter. Various social media platforms have made it possible to know what people are doing every minute of every day. The emerging Internet of Things (IoT) also promises connected, immediate information, not only between people but among billions of people and devices.

However, what happens when a person steps into the enterprise? There, timely, relevant, and contextual delivery of data is not always the norm. Decisions are made on stale information, data (if you can find it) is simply broadcast to everyone regardless of relevance, and responses are reactive instead of proactive. In the consumer world, this would be like having to run a social media “query” such as “select * from my_friends where going_out_tonight = true” just to find out what your friends are up to. Not very effective, and not very timely.

One source of this decision latency is the traditional data processing model: information is stored, then analyzed, and only then acted upon. This process can simply take too long when timeliness and responsiveness are important. Opportunities to interact with customers are missed, the chance to predict equipment failure passes, and processes are slow to respond to changing business conditions. So how can we enhance this traditional model and make it possible to be proactive?

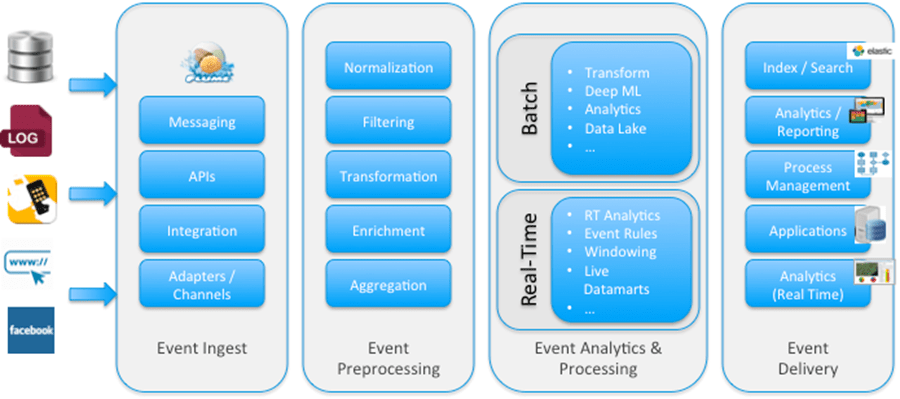

One approach is to continuously capture key business “signals,” or events, from a variety of sources and then apply real-time rules to these events to automatically determine the appropriate responses. These rules may reference information (state) gathered over time, correlating what is happening “now” with what has happened in the “past,” and they may embed statistical or machine-learning models to detect patterns or make “predictions” within the rule logic. To be effective, this approach must also address each stage of the event processing pipeline.

The details behind each stage will be covered in a follow-up post, but for now it is sufficient to look at this pipeline as a series of four main stages:

- Event Ingest: Receiving events from a variety of systems, at different velocities and over different protocols.

- Event Preprocessing: Applying various functions against the events for the purposes of normalization, filtering, transformation, enrichment, and aggregation.

- Event Analytics and Processing: Executing the core “logic” of the pipeline. This stage is typically deployed in parallel with a more batch-oriented processing environment (e.g., Hadoop).

- Event Delivery: Delivering the identified responses and actions to the appropriate recipients and systems, again via a number of mechanisms.
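To make the four stages more concrete, here is a minimal, hypothetical Python sketch of such a pipeline, including a rule that correlates the current event with state gathered in the past. It is not BusinessEvents code or API: the `Event` type, the stage function names, the in-memory `history` and `outbox`, and the “purchase more than twice the customer's average triggers an offer” rule are all illustrative assumptions. A real deployment would ingest from messaging systems and keep its state in a distributed store.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class Event:
    customer_id: str
    kind: str
    amount: float


# Hypothetical per-customer state gathered over time (the "past").
history: dict[str, list[float]] = defaultdict(list)


def ingest(raw_events: Iterable[dict]) -> Iterator[dict]:
    """Stage 1 (ingest): accept raw events; here the 'sources' are just a list."""
    yield from raw_events


def preprocess(raw: Iterable[dict]) -> Iterator[Event]:
    """Stage 2 (preprocess): normalize field names and filter irrelevant events."""
    for r in raw:
        # Hypothetical sources disagree on the key name; normalize to one schema.
        cid = r.get("customer_id") or r.get("cust")
        if cid is None or r.get("kind") != "purchase":
            continue  # filter: only purchases matter to this rule
        yield Event(str(cid), "purchase", float(r["amount"]))


def analyze(events: Iterable[Event]) -> Iterator[str]:
    """Stage 3 (analytics): a rule correlating 'now' with 'past' state."""
    for e in events:
        past = history[e.customer_id]
        # Illustrative rule: a purchase over twice the customer's average spend
        # triggers an offer.
        if past and e.amount > 2 * (sum(past) / len(past)):
            yield f"offer:{e.customer_id}"
        past.append(e.amount)  # update state after the rule is evaluated


def deliver(actions: Iterable[str], outbox: list[str]) -> None:
    """Stage 4 (delivery): route actions to recipients; here, an in-memory outbox."""
    outbox.extend(actions)


raw = [
    {"customer_id": "c1", "kind": "purchase", "amount": 20},
    {"cust": "c1", "kind": "purchase", "amount": 30},
    {"customer_id": "c1", "kind": "page_view", "amount": 0},
    {"customer_id": "c1", "kind": "purchase", "amount": 90},
]
outbox: list[str] = []
deliver(analyze(preprocess(ingest(raw))), outbox)
print(outbox)  # ['offer:c1']
```

Because the stages are chained generators, each event flows through ingest, preprocessing, rule evaluation, and delivery as it arrives, rather than being stored first and analyzed later. That is the contrast with the traditional store-then-analyze model described earlier.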

One TIBCO system that provides these processing capabilities is the TIBCO BusinessEvents platform. BusinessEvents combines event processing, rules, and state management into one cohesive platform, and has been deployed successfully in numerous key projects across the globe. Customers use the platform to monitor key business transactions in real time, present customers with relevant, contextual offers as events occur, and execute business rules in response to customer activity.

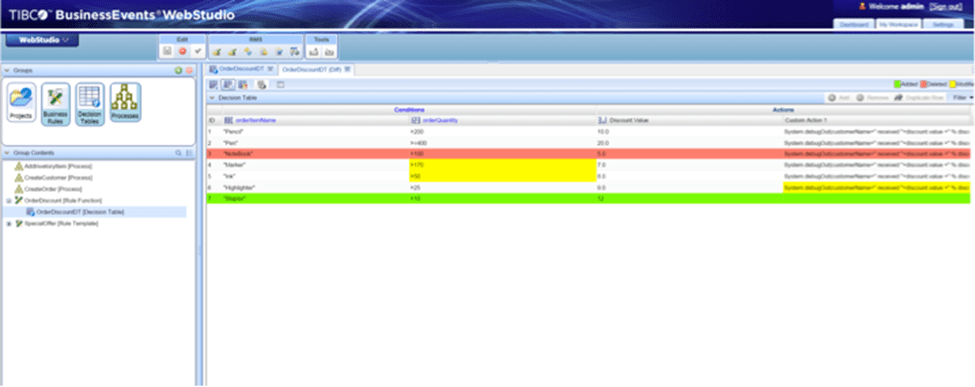

With the new BusinessEvents 5.2 release, TIBCO makes it even easier to expose the power of event-driven rules to business users. The WebStudio feature has been enhanced with additional rule editing and management capabilities, and users can now work with the process management capability directly through WebStudio. For situations where users would like to embed BusinessEvents into their own applications, a new API lets them expose the platform without using the bundled web interface. This broadens the audience that can work with BusinessEvents and increases the business's ability to “self-manage” its rules and logic.

Of course, the core BusinessEvents features remain, including its graphical modeling environment, support for various rule definition metaphors (inference rules, template rules, decision tables, snapshot/continuous queries, state models), and its underlying distributed in-memory persistence layer. These features continue to evolve and improve as the solution is rolled out across more organizations and verticals.

By incorporating an event-driven approach into their architecture, organizations can move toward an information delivery paradigm that mimics many of our experiences in the consumer world. Opportunities or threats can be detected early, and responses generated within a time window that maximizes their effectiveness and business value. BusinessEvents provides a platform that makes this possible. Now, if only I could stop my friends from providing too much information!