Part 4 in a series by James Odell on agent technologies and event processing. Part 1 covered “Why Agent Technology for Event Processing,” Part 2 was on the “Relationship between Agents and Events,” and Part 3 was on “What goes on inside an Agent?” …

Conventional software objects can be thought of as passive, because they wait for a message before performing an operation. Once invoked, they execute their “method” and “rest” until the next message. A current trend in software architecture is to design objects that not only react to events but are also proactive. In UML 2.0, these are known as active objects; in the agent community, they are known as agents. Whether they are called active objects or agents, this new direction is radically changing how we design systems.
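The contrast between passive and active objects can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not UML 2.0 semantics or any agent framework's API: the class names, the inbox queue, and the idle-timeout "proactive tick" are hypothetical choices. The passive object works only when one of its methods is called. The active object runs its own thread, reacts to messages, and also acts when nobody has asked it to.

```python
import queue
import threading
import time


class PassiveCounter:
    """Conventional (passive) object: does nothing until a method is invoked."""

    def __init__(self) -> None:
        self.count = 0

    def increment(self) -> int:
        self.count += 1
        return self.count


class ActiveCounter:
    """Hypothetical active object: owns its own thread of control.

    It reacts to messages placed in its inbox and, when no message arrives
    within `idle_timeout` seconds, takes a proactive action on its own.
    """

    def __init__(self, idle_timeout: float = 0.01) -> None:
        self.inbox: queue.Queue = queue.Queue()
        self.reactions = 0        # actions triggered by incoming messages
        self.proactive_ticks = 0  # actions taken without any message
        self._idle_timeout = idle_timeout
        self._thread = threading.Thread(target=self._run, daemon=True)

    def start(self) -> None:
        self._thread.start()

    def stop(self) -> None:
        self.inbox.put("stop")  # sentinel message ends the loop
        self._thread.join()

    def _run(self) -> None:
        while True:
            try:
                msg = self.inbox.get(timeout=self._idle_timeout)
            except queue.Empty:
                self.proactive_ticks += 1  # nobody asked; act anyway
                continue
            if msg == "stop":
                return
            self.reactions += 1  # react to the incoming event


if __name__ == "__main__":
    passive = PassiveCounter()
    passive.increment()  # work happens only because we called it

    active = ActiveCounter()
    active.start()
    active.inbox.put("ping")
    time.sleep(0.1)  # the active object keeps working while we wait
    active.stop()
    print(passive.count, active.reactions, active.proactive_ticks > 0)
```

A queue with a timeout is just one way to give an object its own thread of control; an event loop or a scheduler would serve equally well.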

The Basic Properties of Agents

At a system level, an agent can be a person, a machine, a piece of software, or a variety of other things. The basic dictionary definition of agent is something that acts (cf. an actor in a use case). However, for developing business and IT systems, such a definition may be too general. While an industry-standard definition of agent has yet to emerge, most agree that agents in an IT context are not useful without the following three properties:

- Autonomous – capability to act without direct external intervention. It has some degree of self-control over its internal state and actions based on its own knowledge or rules.

- Interactive – communicates with the environment and other agents, typically via sensors or interfaces and events.

- Adaptive – capable of responding to other agents and/or its environment during interactions. An agent can also modify its behavior based on past events.

In short, a basic working definition useful for IT purposes is:

An agent is an autonomous entity that can adapt to and interact with its environment.
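As a rough illustration of this working definition, the following Python sketch shows one hypothetical agent exhibiting all three properties. The `ReorderAgent` class, its event names, its ordering policy, and its adaptation rule (reorder point set to roughly twice the average of the last five sales) are invented for illustration; they are assumptions, not part of any standard.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Event:
    kind: str
    quantity: int


@dataclass
class ReorderAgent:
    """Hypothetical agent showing autonomy, interaction, and adaptation."""

    reorder_point: int = 10  # internal state the agent governs itself
    history: List[int] = field(default_factory=list)  # past sales it remembers
    outbox: List[Event] = field(default_factory=list)  # events sent to other agents

    def on_event(self, event: Event) -> None:
        """Interactive: the agent is driven by events from its environment."""
        if event.kind == "sale":
            self.history.append(event.quantity)
            self._adapt()
        elif event.kind == "stock_level":
            self._decide(event.quantity)

    def _decide(self, stock: int) -> None:
        """Autonomous: applies its own rule to its own state; no caller decides."""
        if stock < self.reorder_point:
            # Assumed policy: top the stock up to twice the reorder point.
            self.outbox.append(Event("purchase_order", 2 * self.reorder_point - stock))

    def _adapt(self) -> None:
        """Adaptive: reorder point = twice the average of the last five sales (floored)."""
        recent = self.history[-5:]
        self.reorder_point = max(1, 2 * sum(recent) // len(recent))


if __name__ == "__main__":
    agent = ReorderAgent()
    for e in [Event("stock_level", 8), Event("sale", 3), Event("sale", 5), Event("stock_level", 7)]:
        agent.on_event(e)
    print(agent.reorder_point, agent.outbox)
```

With the default reorder point of 10, a stock level of 8 triggers a purchase order for 12 units; after sales of 3 and 5 the reorder point adapts to 8, so a later stock level of 7 triggers an order for 9.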

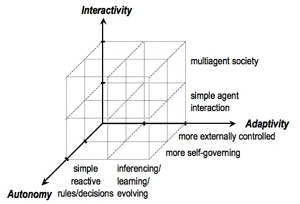

As illustrated in Fig. 1, agents can be autonomous, interactive, and adaptive – to various degrees. It is important to note that these are not “all or nothing” characteristics – there is no “agent minimum specification” qualification list! These three key agent properties will be discussed in more detail below.

Agents are Autonomous

Agents can be thought of as autonomous because each is capable of governing its own behavior, at least to some extent. Autonomy is best characterized by degrees. At one extreme, an agent could be completely self-governing and self-contained. However, an agent that is fully self-sufficient and requires neither the resources of, nor interaction with, other entities is very rare, primarily because it would be unmanageably large and unnecessarily complex for any common role. At the other extreme, an agent that is barely able to perform the simplest self-management is of limited use in complex systems. Even traditional objects have some degree of autonomy within their methods.

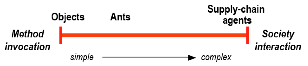

An agent’s level of autonomy can be designed according to its role. For example, in Fig. 2, conventional objects are not completely dependent on outside resources, since they have some state and behavior of their own. However, they are traditionally simple in that they are far from being completely independent. Because a “supply-chain agent” is able to reach certain conclusions and make decisions on its own, it has more autonomy than an “object”. By their very nature, supply-chain agents require interactions with other entities to enable a fully functional supply-chain system, since one agent cannot adequately support an entire organization’s supply-chain processing. Such interactions are also characteristic of the class of agents called event processing agents (EPAs). Even more autonomous are manager agents, because their role can involve a high degree of internal decision making through activities such as monitoring and delegation, which depend on outside resources.

Agents are Interactive

Interaction implies the ability to communicate with the environment and other entities. As illustrated in Fig. 3, interaction can also be expressed in degrees.

On one end of the scale, method invocations can be seen as the most basic form of interaction. More sophisticated are agents that can react to observable events within the environment. For example, food-gathering ants do not invoke methods on each other; they interact indirectly, through the physical effects their pheromones have on the environment. Even more complex interactions occur when agents derive information (complex events) from multiple events over time. Here agents begin to act with “intelligence”. And then there are multiagent systems (MAS), in which agents engage in multiple, parallel interactions with other agents. Here agents begin to act as a society.
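Deriving a complex event from multiple events over time can be sketched as a sliding-window detector. In this Python example, a minimal sketch that assumes events arrive in timestamp order, a hypothetical event processing agent emits one `suspicious_login` complex event when three `login_failed` events for the same user fall within 60 seconds. The event names, threshold, and window length are illustrative assumptions.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Deque, Dict, List, Optional


@dataclass(frozen=True)
class Event:
    kind: str
    user: str
    timestamp: float  # seconds; events are assumed to arrive in order


class FailedLoginDetector:
    """Hypothetical event processing agent that derives a complex event.

    Emits a "suspicious_login" event when `threshold` "login_failed" events
    for one user occur within a sliding window of `window` seconds.
    """

    def __init__(self, threshold: int = 3, window: float = 60.0) -> None:
        self.threshold = threshold
        self.window = window
        self._recent: Dict[str, Deque[float]] = defaultdict(deque)

    def on_event(self, event: Event) -> Optional[Event]:
        if event.kind != "login_failed":
            return None  # this agent ignores other simple events
        times = self._recent[event.user]
        times.append(event.timestamp)
        # Forget failures that have slid out of the time window.
        while times and event.timestamp - times[0] > self.window:
            times.popleft()
        if len(times) >= self.threshold:
            times.clear()  # reset so one burst yields one complex event
            return Event("suspicious_login", event.user, event.timestamp)
        return None


if __name__ == "__main__":
    detector = FailedLoginDetector()
    stream = [
        Event("login_failed", "alice", 0.0),
        Event("login_failed", "alice", 20.0),
        Event("login_ok", "bob", 25.0),
        Event("login_failed", "alice", 45.0),
    ]
    derived: List[Event] = [e for e in map(detector.on_event, stream) if e is not None]
    print(derived)
```

No single `login_failed` event means much on its own; the derived event exists only because the agent remembers and correlates several of them over time.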

Finally, the ability to interact becomes most complex when systems involving many heterogeneous agents can coordinate cooperatively and/or competitively through such mechanisms as negotiation and planning.
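One classic negotiation mechanism for this kind of coordination is the Contract Net protocol, in which a manager announces a task, contractors bid, and the manager awards the task. The Python sketch below is a stripped-down, single-round version under simplifying assumptions (synchronous calls, price as the only award criterion, a fixed 10% margin); the class and function names are hypothetical. Each contractor decides for itself whether to bid, so the contractors compete with one another while the system as a whole cooperates to get the task done.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Bid:
    bidder: str
    price: float


class Contractor:
    """Hypothetical contractor agent that decides for itself whether to bid."""

    def __init__(self, name: str, costs: Dict[str, float]) -> None:
        self.name = name
        self.costs = costs  # task -> this agent's private cost estimate
        self.awarded: List[str] = []

    def propose(self, task: str) -> Optional[Bid]:
        """Reply to a call for proposals: bid cost plus margin, or decline."""
        cost = self.costs.get(task)
        if cost is None:
            return None  # refuse: this agent cannot perform the task
        return Bid(self.name, cost * 1.1)  # assumed fixed 10% margin

    def accept_award(self, task: str) -> None:
        self.awarded.append(task)


def contract_net(task: str, contractors: List[Contractor]) -> Optional[str]:
    """One round: announce the task, collect bids, award it to the lowest bidder."""
    bids: List[Bid] = []
    for contractor in contractors:
        bid = contractor.propose(task)
        if bid is not None:
            bids.append(bid)
    if not bids:
        return None  # no bids; the manager would have to replan
    winner = min(bids, key=lambda b: b.price)
    for contractor in contractors:
        if contractor.name == winner.bidder:
            contractor.accept_award(task)
    return winner.bidder


if __name__ == "__main__":
    fleet = [
        Contractor("truck", {"ship_pallet": 100.0}),
        Contractor("rail", {"ship_pallet": 80.0}),
        Contractor("drone", {"photo_site": 5.0}),
    ]
    print(contract_net("ship_pallet", fleet))  # rail has the lowest bid
    print(contract_net("paint_wall", fleet))   # nobody bids
```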

Agents are Adaptive

An agent is considered adaptive if it can respond to other agents and/or its environment. Entities that fail to adapt to an ever-changing world become extinct: organisms die, companies go out of business, and so forth. For an organization, adaptation enables the system to react effectively to changes in areas such as the market and business environment. For IT systems, adaptation enables appropriate reactions that support system balancing, integrity assurance, and self-healing. The goals of adaptation center on remaining competitive and useful, and on deriving value. When designed properly, individual parts of systems can be empowered to change based on their environment and market conditions.

The primary forms of adaptivity include:

- Simple reaction rules – At a minimum, an agent must be able to react to a simple stimulus, that is, to make a direct, predetermined response to a particular event or environmental signal. Such a response is usually expressed in IF-THEN form. Thermostats, robotic sensors, and simple events can fall into this category. From atoms to ants, the reactive mode is quite evident. A carbon atom has a rule that states, in effect, “If I am alone, I will only bond with oxygen atoms.” Simple reactions can also include business decision rules, such as event-condition-action (ECA) rules.

- Inference rules – Beyond the simple reactive agent is the agent that can appear to reason by following chains of rules. Examples of agents that react by making inferences include patient-diagnosis agents, certain kinds of data-mining agents, and agents that identify complex events. Such inferences are computed by following a chain of inference rules, which can be goal-directed (backward chaining) or data- and event-driven (forward chaining).

- Learning – Learning is change that occurs during the lifetime of an agent and can take many forms. Popular learning techniques include reinforcement learning based on “credit assignment”, Bayesian and classifier rules, and neural networks. Examples of learning agents include agents that approve credit applications, analyze speech, or recognize and track targets.

- Evolution – Evolution is change that occurs over successive generations of agents. Agent evolution usually relies on genetic algorithms and genetic programming. Here, agents’ adaptive mechanisms can literally be “bred” to fit specific purposes. For example, evolved operation plans, circuitry, and software programs can prove better than anything a human could produce in a reasonable amount of time.
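The first two forms of adaptivity above can be sketched in Python: a set of event-condition-action rules for simple reaction, and naive forward chaining for data-driven inference. Both are minimal sketches; the rule representation, the thermostat thresholds, and the fraud-related facts are hypothetical examples, not taken from any particular rule engine.

```python
from dataclasses import dataclass
from typing import Callable, List, Set, Tuple


@dataclass
class EcaRule:
    """ON `event` IF `condition` THEN `action` (event-condition-action)."""
    event: str
    condition: Callable[[dict], bool]
    action: Callable[[dict], str]


def react(rules: List[EcaRule], event: str, data: dict) -> List[str]:
    """Simple reaction: fire every rule whose event matches and whose condition holds."""
    return [r.action(data) for r in rules if r.event == event and r.condition(data)]


def forward_chain(facts: Set[str], rules: List[Tuple[Set[str], str]]) -> Set[str]:
    """Data-driven inference: apply IF premises THEN conclusion rules to a fixed point."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)  # a new fact may enable further rules
                changed = True
    return known


# Hypothetical thermostat expressed as ECA rules.
THERMOSTAT_RULES = [
    EcaRule("temperature_reading", lambda d: d["celsius"] < 18, lambda d: "heater_on"),
    EcaRule("temperature_reading", lambda d: d["celsius"] > 24, lambda d: "heater_off"),
]

# Hypothetical inference chain: the second rule needs the first rule's conclusion.
FRAUD_RULES = [
    ({"card_used_abroad", "large_withdrawal"}, "unusual_activity"),
    ({"unusual_activity", "no_travel_notice"}, "possible_fraud"),
]

if __name__ == "__main__":
    print(react(THERMOSTAT_RULES, "temperature_reading", {"celsius": 16}))
    print(forward_chain({"card_used_abroad", "large_withdrawal", "no_travel_notice"}, FRAUD_RULES))
```

The reactive rules map one stimulus directly to one response, whereas forward chaining reaches `possible_fraud` only by first deriving the intermediate fact `unusual_activity`, which is the "chain of rules" the inference item describes.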

Other Agent Properties

In the sections above, three key agent properties were discussed: autonomy, interactivity, and adaptivity. These properties matter because they are essential for agents deployed in IT systems. However, agents may also possess various combinations of other properties, whose usefulness depends on the application requirements and the agent designer. Such characteristics include being:

- Sociable – interacts in a manner marked by friendliness or pleasant social relations, that is, the agent is affable, companionable, or friendly.

- Mobile – able to transport itself from one environment to another, and geo-aware.

- Proxy – may act on behalf of someone or something, that is, acting in the interest of, as a representative of, or for the benefit of some other entity.

- Intelligent – its state is formalized by knowledge (e.g., beliefs, goals, plans, assumptions), and it interacts with other agents using a symbolic language.

- Rational – able to choose an action based on internal goals and the knowledge that a particular action will bring it closer to its goals.

- Temporally continuous – is a continuously running process.

- Credible – has a believable personality and emotional state.

- Transparent and accountable – is transparent when required and provides a log of its activities on demand.

- Coordinative – able to perform some activity in a shared environment with other agents. Activities are often coordinated via plans, workflows, or some other process management mechanism.

- Cooperative – able to coordinate with other agents to achieve a common purpose; non-antagonistic agents that succeed or fail together. (Collaboration is another term used synonymously with cooperation.)

- Competitive – able to coordinate with other agents such that the success of one agent implies the failure of others (the opposite of cooperative).

- Rugged – able to deal with errors and incomplete data robustly.

- Trustworthy – adheres to the Laws of Robotics and is truthful.