Part 3 in a series by James Odell on agent technologies and event processing. Part 1 covered Why Agent Technology for Event Processing, and Part 2 covered the Relationship between Agents and Events. Now over to Jim…

Agents are autonomous entities that can adapt to and interact with their environment. To accomplish this, agents must have some means by which they can perceive their environment and act upon those perceptions.

An agent can be a machine, a piece of software, or a variety of other things (even a person). The basic dictionary definition of agent is something that acts. However, for developing business and IT systems, such a definition is too general. While an industry-standard definition of agent has not yet emerged, most agree that agents deployed for IT systems are not useful without the following three important properties:

- Autonomous – is capable of acting without direct external intervention. It has some degree of control over its internal state and actions based on its own experiences.

- Interactive – communicates with other agents and the environment.

- Adaptive – able to change its behavior based on its goals, change in state, and experience.

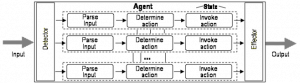

Agent as a black box

At its simplest, each agent can be thought of as an interacting black-box process, where no indication of an agent’s internal structure and behavior is given other than that which is visible from outside the agent. Figure 1 illustrates that agents have input from, and output to, the environment – i.e., they receive and emit events. An agent’s environment can be thought of as everything outside of the agent. For robotic agents, the environment would consist of the terrain, the constraints of motion and control, and other agents. For software agents, the environment would consist of the hosting platform, its operating systems and supporting middleware, and other agents.

Input can be whatever the agent “perceives,” such as messages, requests, commands, and other events. Based on such input, the agent can decide how to act, which typically results in some output from the agent, such as communicating with, or making a change to, its environment. For instance, our Robotic agent could receive a move command, and then change its state through movement (events). An Inventory agent could receive a request for the quantity-on-hand for a given commodity, causing it to determine the requested quantity and send a response event to the requestor. The Inventory agent would also receive notification events regarding inventory being removed and sent to a customer. The agent process would then recompute the quantity-on-hand and decide if a reorder point has been reached, and if so, would send out requests-for-bid to appropriate suppliers.
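The Inventory agent described above can be sketched as a black box that takes events in and hands events back. This is only an illustration: the `Event` shape, the event names, and the rule that a reorder is triggered when quantity-on-hand falls to or below the reorder point are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    """A generic event; the field names are assumptions for this sketch."""
    kind: str
    payload: dict = field(default_factory=dict)


class InventoryAgent:
    """Black-box view: events go in through handle(), events come out as a list."""

    def __init__(self, on_hand: int, reorder_point: int):
        self.on_hand = on_hand
        self.reorder_point = reorder_point

    def handle(self, event: Event) -> list[Event]:
        if event.kind == "quantity_request":
            # Answer the requestor with the current quantity-on-hand.
            return [Event("quantity_response", {"on_hand": self.on_hand})]
        if event.kind == "inventory_removed":
            # Recompute quantity-on-hand, then check the reorder point.
            self.on_hand -= event.payload["quantity"]
            if self.on_hand <= self.reorder_point:
                return [Event("request_for_bid", {"on_hand": self.on_hand})]
            return []
        # Events the agent does not recognize produce no output.
        return []
```

From the outside, a caller only ever sees the events passed to `handle` and the events it returns; how the agent keeps its count is hidden.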

Agent processing overview

For an agent to interact with its environment, it must be able not only to detect and affect its environment, but also to understand what it is detecting, decide on an appropriate response, and act on it. The internal structure of an agent can be visualized as illustrated in Figure 2.
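As a rough sketch, that internal structure can be written as a small pipeline. The stage names follow the headings used in the rest of this post (detect, parse, decide, invoke, effect); the class name and the callables passed in are hypothetical and not taken from any particular agent platform.

```python
class PipelineAgent:
    """Detect -> parse -> decide -> invoke -> effect, with shared state."""

    def __init__(self, parse, decide, effect):
        self.state = {}         # what the agent "knows"
        self.parse = parse      # raw input -> structured request
        self.decide = decide    # (request, state) -> action callable
        self.effect = effect    # sends output to the environment

    def detect(self, raw_input):
        request = self.parse(raw_input)
        action = self.decide(request, self.state)
        result = action(request, self.state)   # invoke the chosen action
        if result is not None:
            self.effect(result)


# Usage: a toy agent that counts the commands it has seen.
sent = []


def count(request, state):
    state["count"] = state.get("count", 0) + 1
    return f"{request['command']} #{state['count']}"


agent = PipelineAgent(
    parse=lambda raw: {"command": raw.strip().lower()},
    decide=lambda request, state: count,
    effect=sent.append,
)
agent.detect("  MOVE ")
agent.detect("move")
```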

State

Just like objects, agents can have a form of memory, referred to here simply as state. State includes the data that the agent needs to operate; it is either maintained by the agent or accessible to it in some manner (for example, via a database or automated blackboard).

For example, the Inventory agent would need to maintain information such as the reorder point for a particular product or the algorithm needed to compute the reorder point. The agent might also maintain the list of valid product vendors that it can contact for reordering, or at least know where the information might be found, or which other agent it can delegate that request to. The agent could even make inferences about its own state or the state of other entities in its environment. State, then, can be metaphorically interpreted as anything that the agent can “know” or “believe” about itself or its environment.

Detectors

Detectors provide the agent’s interface to the environment. They receive the input events that are of interest to the agent. Detectors can either receive input sent to them (i.e., a “push” model) or actively scan the environment for it (i.e., a “pull” model). Detectors can receive simple messages and event notifications, or they can scan message logs or monitor event occurrences. In particular, event-monitoring agents (in event-driven architectures) may be specialized to recognize particular kinds of state changes in the environment.

For example, master data agents could watch for certain kinds of changes in a database such as metamodel modifications, customer-address changes, or even the database going offline. If any of these events were detected, the agent could notify other agents that can react appropriately, such as the system monitor agent that notifies the DBA. In event-driven architectures (EDA), this is particularly useful. Our robotic agents may employ such sensors as cameras, global positioning hardware, or infrared devices for detectors, and directly process and interpret the associated images and event streams.
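The push and pull models described above can be illustrated with a minimal detector. This is a sketch under stated assumptions: events are plain dictionaries with a `kind` key, the log is an in-memory list, and the event names are made up to echo the master data example.

```python
from collections import deque


class Detector:
    """Collects input events of interest, via push or pull."""

    def __init__(self, kinds_of_interest):
        self.kinds_of_interest = set(kinds_of_interest)
        self.inbox = deque()

    def receive(self, event):
        """Push model: the environment hands an event to the detector."""
        if event["kind"] in self.kinds_of_interest:
            self.inbox.append(event)

    def scan(self, log, start=0):
        """Pull model: the detector reads a log from a given position.

        Returns the position to resume from on the next scan.
        """
        for event in log[start:]:
            self.receive(event)
        return len(log)


# Usage: one pushed event, then two scans of a growing log.
detector = Detector({"customer_address_changed", "database_offline"})
detector.receive({"kind": "heartbeat"})          # ignored: not of interest
detector.receive({"kind": "database_offline"})   # kept
log = [{"kind": "customer_address_changed"}, {"kind": "heartbeat"}]
position = detector.scan(log)
log.append({"kind": "database_offline"})
position = detector.scan(log, position)
```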

Parse input

Once the detector receives input, the agent needs to analyze and “understand” the input. This action typically involves recognition, normalization, and exception handling. For conventional object-oriented software, there is virtually no parsing: in the OO “signature,” the first parameter identifies the object, the second specifies the requested operation, and the remaining input contains the data required to execute the operation. Because the signature syntax is already defined in this manner, the message does not need to go through any “decide action” step. Instead, it goes immediately to invoke its specified method.

In a system with a more complex style of input, the OO approach cannot provide a satisfactory solution. For example, some IT agents need to process complex syntax and receive input in one or more languages, such as SQL, Prolog, BPEL4WS, BPML, or ebXML. They may need to understand complex ontologies expressed in OWL before any further action can be determined against some RDF data. For complex-event-processing agents, this parsing can mean identifying event patterns in the input. Such event pattern recognition is important for agents, especially those that need to recognize many potential new state situations (e.g., self-healing systems).

Granted, activities like parsing require greater complexity than a traditional object. In fact, not all agents will require such sophisticated behaviors. Yet, when system requirements demand richer behavioral control at execution time, agents need to be able to accomplish what traditional objects cannot. Activities like parsing or accepting rule updates extend the notion of the semantic net toward a semantic approach to system interaction in general.
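To make the idea of event pattern recognition a little more concrete, here is one very simple pattern: a burst of the same kind of event inside a time window. The function name, the tuple layout, and the choice of pattern are assumptions for illustration; real complex-event-processing engines support far richer patterns.

```python
def detect_bursts(events, kind, threshold, window):
    """Yield the timestamp of each event that completes a burst.

    A burst is `threshold` events of `kind` within `window` time units.
    `events` is an iterable of (timestamp, kind) tuples in time order.
    """
    recent = []  # timestamps of matching events still inside the window
    for timestamp, event_kind in events:
        if event_kind != kind:
            continue
        recent.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        recent = [t for t in recent if timestamp - t <= window]
        if len(recent) >= threshold:
            yield timestamp
            recent = []  # start over so each burst is reported once


stream = [
    (0, "login_failed"),
    (1, "login_ok"),
    (2, "login_failed"),
    (4, "login_failed"),
    (50, "login_failed"),
    (100, "login_failed"),
]
bursts = list(detect_bursts(stream, "login_failed", threshold=3, window=10))
```

An agent could treat each reported burst as a new, higher-level event and pass it on to its Decide Action step.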

Decide action

As suggested earlier, traditional OO recommends that the method requested of an object be determined before a message is sent to the object, because each object class defines separate methods, one for each kind of message “signature.” If the number of possible methods is manageable, this is not a problem; for those situations requiring hundreds or thousands of possibilities, such an approach is impractical.

For example, insurance companies have hundreds of ways of rating a policy; retailers and shipping companies have an equally large number of rules and procedures for pricing a product or service. To support this need in an agile and adaptive manner, the agent also needs the ability to determine the appropriate method after it receives a request for service. In this way, a Policy agent can decide on the appropriate method to rate a policy based on the requestor’s needs. Order agents can decide on the appropriate pricing algorithm when the customer submits an order.

Another function within the Decide Action step could be to determine the appropriate goal for further consideration. For example, an Inventory agent would detect each time a product was pulled from a warehouse bin, and for each such instance it might need to first decide if the product should be reordered or, if it is selling too slowly, discontinued altogether. Each of these goals requires a different process. One might involve sending out and processing bids from other agents to refill the bin; the other might notify customer agents that the product will no longer be available, and await responses.

The Decide Action process might involve a high degree of complex processing in its own right. Can this be accomplished using an OO approach? Absolutely. However, some agent platforms have mechanisms that support higher-order models of decisions directly – for example, using rule engines – instead of having the programmer do extra work in design, construction, testing, and maintenance.
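A tiny stand-in for a rule engine shows what choosing the method after the request arrives can look like for the Policy agent. Everything here is hypothetical: the rating functions, the multipliers, and the policy fields are invented for the example, and a real rule engine would offer much more than an ordered list of conditions.

```python
def rate_standard(policy):
    return policy["base_premium"]


def rate_young_driver(policy):
    return policy["base_premium"] * 1.5


def rate_fleet(policy):
    return policy["base_premium"] * policy["vehicles"] * 0.9


# Ordered (condition, method) pairs; the first matching rule wins.
RATING_RULES = [
    (lambda p: p.get("vehicles", 1) > 1, rate_fleet),
    (lambda p: p.get("driver_age", 99) < 25, rate_young_driver),
    (lambda p: True, rate_standard),
]


def decide_action(policy, rules=RATING_RULES):
    """Pick the rating method only after seeing the request."""
    for condition, method in rules:
        if condition(policy):
            return method
    raise LookupError("no rule matched")


request = {"base_premium": 100, "driver_age": 22}
method = decide_action(request)
premium = method(request)
```

Because the rules live in data rather than in the caller, adding a new way of rating a policy means adding a rule, not changing every requestor.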

Invoke action

Once selected, the appropriate action needs to be invoked. The action execution may involve actions internal to the agent, in which case, message events can be sent directly to the agent’s own detectors. If the action involves other agents, the message events need to be sent to them. The agent controls its autonomy by using its own resources or having them provided by other agents. It determines what it can do and what it must delegate. In this sense, the agent manages itself, rather than just being managed.

In addition, some agents can determine when their internal actions are not functioning as expected. The agent can also communicate to itself that the situation needs to be remedied by sending itself a message and letting some Decide Action step determine which action should be taken next. In other words, the agent can have its own self-aware processes. In a business process management system, such mechanisms can be handled in a distributed manner using events and agents, rather than by some centralized controller that will not scale under large processing volumes.
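The self-messaging idea can be sketched as follows. When an action fails, the agent posts a message to its own inbox and lets its decide step choose what to do next. The class, the message fields, and the fallback of escalating unknown messages are assumptions made for this example.

```python
from collections import deque


class SelfMonitoringAgent:
    def __init__(self, actions):
        self.actions = actions   # maps message kind -> callable
        self.inbox = deque()     # stands in for the agent's detectors
        self.outbox = []         # stands in for the agent's effectors

    def decide(self, message):
        # Messages with no dedicated action are escalated to the outside.
        return self.actions.get(message["kind"], self.escalate)

    def escalate(self, message):
        self.outbox.append({"kind": "needs_attention", "about": message})

    def step(self):
        message = self.inbox.popleft()
        action = self.decide(message)
        try:
            action(message)
        except Exception as error:
            # The agent tells itself that something went wrong.
            self.inbox.append(
                {"kind": "action_failed", "cause": str(error), "original": message}
            )

    def run(self):
        while self.inbox:
            self.step()


def broken_print(message):
    raise RuntimeError("printer jammed")


agent = SelfMonitoringAgent({"print": broken_print})
agent.inbox.append({"kind": "print"})
agent.run()
```

After `run()` returns, the outbox holds a single `needs_attention` message that wraps the agent's own `action_failed` report.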

Effectors

When messages need to be sent or events posted outside the agent, effectors are the connection with the agent’s environment. They are the mechanism an agent uses to interact with and move within the environment. This could involve sending messages through a message bus or activating a hardware device such as a printer or robot arm.

Agents can be multiprocessing

Agents can be single threaded or designed to process input concurrently. (Figure 3) Agents that can support concurrency might also have a process controller that coordinates the various internal actions in some manner.

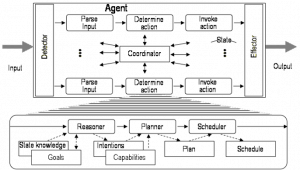
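As a minimal sketch of an agent that processes input concurrently, the example below uses a thread pool in the role of a very simple process controller and a lock to protect shared state. The class name and the placeholder work done in `_handle` are assumptions; a real controller would also deal with priorities, ordering, and failures.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class ConcurrentAgent:
    def __init__(self, workers=4):
        self.workers = workers
        self.processed = 0
        self._lock = threading.Lock()   # guards the shared counter

    def _handle(self, event):
        result = event.upper()          # placeholder for real work
        with self._lock:
            self.processed += 1
        return result

    def process_all(self, events):
        # The executor coordinates the concurrent internal actions;
        # map() returns results in the same order as the inputs.
        with ThreadPoolExecutor(max_workers=self.workers) as pool:
            return list(pool.map(self._handle, events))


agent = ConcurrentAgent()
results = agent.process_all(["move", "scan", "report"])
```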

Reasoning, planning, and scheduling

A common approach for agents can involve reasoning, planning, and scheduling. (Figure 4) In this approach, an agent can determine the appropriate action by having a reasoner step consider what it knows about its state and the state of its environment (including that of the service requestor). Additionally, the agent reasoner can identify the appropriate goals for acting based on the detected input and state knowledge. Here, the agent may optionally weigh the importance of certain goals (when there are several or conflicting goals to choose from).

For example, a Scheduling agent may have a goal to ensure that all the tasks in its care are executed on time. Another goal might be that certain priorities must be given to important tasks. The two goals, however, may conflict when priority tasks cause the delay of other tasks. Here, the agent may have to weigh the cost of both and determine which service it intends to perform under a given constraint.

A planner then determines the actual steps needed to execute the agent’s intention. For example, the scheduling agent may need to ensure a particular product is created for a given order. The agent may need a make-or-buy decision resulting in a process that either fabricates the product in-house or purchases a fabricated item from an external source. Some agent planners dynamically assemble the planned set of steps to fit each occasion; others have a library of prefabricated processes from which to choose.

The planned process is then sent to the agent’s scheduler to determine which resources will be assigned and when the process will be scheduled [see note 1]. On top of this, an agent may also employ a coordinator that ensures that the agent’s concurrent processing works harmoniously: managing roles and conflicts, as well as optimizing the overall functioning of the agent.
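The reasoner, planner, and scheduler steps can be sketched as three small functions. This is a toy under stated assumptions: the goal names, weights, belief fields, and plan steps are invented, the planner picks from a library of prefabricated processes, and the scheduler simply assigns consecutive hours.

```python
PLAN_LIBRARY = {
    "make": ["reserve_materials", "fabricate", "inspect"],
    "buy": ["request_bids", "select_supplier", "receive_goods"],
}


def reason(beliefs, goals):
    """Reasoner: among the goals that apply, intend the one with the greatest weight."""
    applicable = [g for g in goals if g["applies"](beliefs)]
    if not applicable:
        return None
    return max(applicable, key=lambda g: g["weight"])["name"]


def plan(intention, beliefs):
    """Planner: make-or-buy, judged by the measure the intention cares about."""
    measure = "hours" if intention == "on_time" else "cost"
    choice = min(("make", "buy"), key=lambda option: beliefs[option][measure])
    return PLAN_LIBRARY[choice]


def schedule(steps, start_hour):
    """Scheduler: give each step a start hour, one after another."""
    return [(start_hour + offset, step) for offset, step in enumerate(steps)]


beliefs = {
    "order_is_late": True,
    "make": {"hours": 8, "cost": 90},
    "buy": {"hours": 3, "cost": 120},
}
goals = [
    {"name": "low_cost", "weight": 1, "applies": lambda b: True},
    {"name": "on_time", "weight": 2, "applies": lambda b: b["order_is_late"]},
]
intention = reason(beliefs, goals)
timetable = schedule(plan(intention, beliefs), start_hour=9)
```

Here the two goals conflict: making in-house is cheaper, buying is faster. Because the order is believed to be late, the heavier `on_time` goal wins and the agent plans to buy.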

What’s next?

The next “CEP and Agents” blog entry will address agent composition, in general. This blog post surveyed the kinds of functionality that agents can possess internally; the next blog will explore how agent functionality can be physically rendered. For example, every cell in your body consists of many components, such as those in Figure 5, right.

Using nature as an analogy, then, agents can have multiple internal components that provide their overall behavior – just like those illustrated in Figure 4. Furthermore, some of the cell components can, themselves, be cells in their own right. For example, mitochondria are thought to have originated as free-living cells, yet they live within other cells for the purpose of providing energy to their host. Similarly, the internal functions for the agent illustrated in Figure 4 may be agents in their own right. For example, the Detector capability in Figure 4 may be implemented as a TIBCO Hawk agent (which specializes in system event detection). In other words, the internal agent functionality can be provided by a combination of mechanisms, such as program modules, components, or first-class agents.

Also, by considering composition in the opposite direction, we find multicellular forms of life such as sea coral, bodily organs and so on. And if we continue in this direction, we find even larger and more intricate systems, including humans, human social groups and organizations, and ecosystems. The same analogy applies to agents. As we just learned, an agent can consist of other agents. By continuing this pattern, such grouping is a way of progressively modularizing multiple agents into structures such as teams, organizations, societies – and, yes, even agent ecosystems.

____________________________

[Note 1: Agents using this approach are sometimes referred to as “BDI” agents, because they employ beliefs, desires, and intentions. Beliefs refer to what the agent “believes” the state of its environment and other agents to be; desires basically refer to the goals the agent could attempt to achieve; and intentions refer to the set of goals the agent actually intends to achieve under given circumstances.]