Part 2 in a series by James Odell on agent technologies and event processing. Part 1 covered Why Agent Technology for Event Processing. Now over to Jim…

Let’s start with the situation where a tree falls in the forest when no one is there to see or hear it. If there is no mechanism to sense, notify, or record the occurrence of the tree’s state change, is there really an event? Since no knowledge of the event will exist, it will be, well, a non-event.

However, we don’t always need people to serve as event processors. Off-the-shelf sensors and traditional software could easily provide event processors that can sense, notify, or record such events. So, why do we need agent technology here? The answer centers on the number and complexity of events that need to be processed. Simple events that trigger a process can be supported by conventional means.

However, what if we change our perspective from a simple tree falling in the forest to our buzzing world of daily business communications? Business communications are numerous and often complex. One communication could be monitored by thousands, and then filtered, analyzed, and aggregated with other communications, which in turn can become noteworthy events for others, and so on. As this continues to grow in size and complexity, the task of understanding, analyzing, and controlling it can quickly go beyond the abilities of conventional technology. Here is where agents can step in.

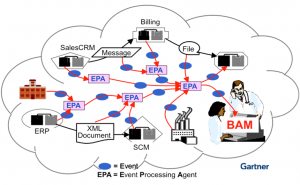

For example, the figure above, from Roy Schulte (Gartner), is a token representation of a complex event processing environment. On the left, you can see an event processing agent (EPA) receiving two events. As a result of these two events, the EPA may determine that another event should (or should not) occur. For instance, a Buy and a Sell event for a particular customer’s stock might have occurred. If the Sell event occurred prior to the Buy, a trading-error event could be generated. This event might be one of three events that another EPA was monitoring, resulting in another event. That event, in turn, is monitored by yet another EPA, which produces one of perhaps two events, depending on the results of its analysis.
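
The Buy/Sell case can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any particular CEP product works: the `Event` fields, the `TradingErrorEPA` class, and the `on_event` method are all hypothetical names invented for this example. The EPA waits until it has seen both a Buy and a Sell for the same customer and stock, then compares their timestamps.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class Event:
    """Hypothetical event record; the field names are assumptions."""
    kind: str          # "Buy", "Sell", or a derived kind such as "TradingError"
    customer: str
    stock: str
    timestamp: float


class TradingErrorEPA:
    """Hypothetical EPA: pairs a Buy with a Sell and flags Sell-before-Buy."""

    def __init__(self) -> None:
        # Events seen so far, grouped by (customer, stock) and then by kind.
        self._pending: Dict[Tuple[str, str], Dict[str, Event]] = {}

    def on_event(self, event: Event) -> Optional[Event]:
        """Return a derived TradingError event, or None if nothing should occur."""
        if event.kind not in ("Buy", "Sell"):
            return None
        key = (event.customer, event.stock)
        seen = self._pending.setdefault(key, {})
        seen[event.kind] = event
        if "Buy" not in seen or "Sell" not in seen:
            return None  # still waiting for the other half of the pair
        buy, sell = seen["Buy"], seen["Sell"]
        del self._pending[key]
        if sell.timestamp < buy.timestamp:
            # The derived event can itself be fed to a downstream EPA.
            return Event("TradingError", event.customer, event.stock, buy.timestamp)
        return None
```

Feeding the EPA a Sell followed by a later Buy for the same customer and stock yields a `TradingError` event; a Buy followed by a later Sell yields nothing. A downstream EPA would simply treat that derived event as one of its own inputs.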

So, why use agents? Why not use a large brain-like approach that would solve the event processing centrally, such as an expert system or neural network? You could, up to a point. However, when your CEP starts requiring thousands of rules to be processed, a single expert system quickly becomes unwieldy to maintain and test. So, another approach is to divide the expert system into smaller, manageable yet federated expert systems. This would be workable up to a dozen or so expert systems, but what happens then? Would you be able to understand and manage them? If your expert systems each had a particular business-based theme, you might be able to manage them because there would be some rhyme or reason for the rule grouping. The result, then, is a federated set of smaller, business-organized event-processing “brains.” But again, as each expert system becomes too large, it too must be partitioned into multiple, smaller, business-based ones.

So, where does this all stop? As an organization – particularly a global one – expands or changes, this constant divide-and-conquer approach will not stop. The agent approach, then, offers a saner alternative: start with small, business-based units from the outset.

Agents are autonomous and interactive entities that are defined on the basis of a specific need. They may play multiple roles, learn, and evolve if need be. They may behave socially and self-organize. In other words, they can be as adaptable as required to be able to service specialized needs and communicate with other agents. Just like social beings, they can have the ability to understand, analyze, and control their specific niche.

Agents, then, are a way to “divide and conquer” the problem, much as humans (and other organisms) do. It’s still software, but it’s modularized on a business-needs basis, and can be thought of as small-granularity “intelligent objects.” They can mimic social configurations and interactions and can scale and perform as an organization. Leveraging agents in this manner, then, enables event processing that is concurrent and distributed. Does this sound like the living, breathing world around us? It should. This is why agent-based applications are able to better manage complex systems and adapt to change.

So, what is the relationship between agents and events? Agents enable event processing networks to scale in size and complexity — and be designed in a more human-understandable manner. Agents can be used for monitoring, filtering, analyzing, and aggregating events – before notifying other agents. As Paul Vincent indicated in his earlier blogs, agents can also be used within the CEP middleware infrastructure, combining source event distribution with agent communication.
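
To make the monitor, filter, aggregate, notify chain a little more concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `Agent` class, the `subscribe` and `receive` methods, the dictionary-shaped events, and the `LargeOrderBurst` event type are hypothetical, and the agents run synchronously in one process rather than concurrently and distributed as a real agent platform would.

```python
from typing import Callable, Dict, List

Event = Dict[str, object]
Handler = Callable[[Event], List[Event]]


class Agent:
    """Hypothetical agent: handles an event and notifies its subscribers."""

    def __init__(self, name: str, handler: Handler) -> None:
        self.name = name
        self._handler = handler          # returns derived events, possibly none
        self._subscribers: List["Agent"] = []

    def subscribe(self, other: "Agent") -> None:
        """Register another agent to be notified of this agent's output."""
        self._subscribers.append(other)

    def receive(self, event: Event) -> None:
        for derived in self._handler(event):
            for subscriber in self._subscribers:
                subscriber.receive(derived)


def make_filter(threshold: int) -> Handler:
    """Pass along only events whose amount reaches the threshold."""
    return lambda event: [event] if event["amount"] >= threshold else []


def make_aggregator(batch_size: int) -> Handler:
    """Combine every batch_size events into one summary event."""
    batch: List[Event] = []

    def handler(event: Event) -> List[Event]:
        batch.append(event)
        if len(batch) < batch_size:
            return []
        total = sum(e["amount"] for e in batch)
        batch.clear()
        return [{"type": "LargeOrderBurst", "amount": total}]

    return handler


def make_collector(sink: List[Event]) -> Handler:
    """Terminal agent: record what it is told and emit nothing further."""
    def handler(event: Event) -> List[Event]:
        sink.append(event)
        return []

    return handler


alerts: List[Event] = []
filter_agent = Agent("filter", make_filter(1000))
aggregate_agent = Agent("aggregate", make_aggregator(2))
notify_agent = Agent("notify", make_collector(alerts))
filter_agent.subscribe(aggregate_agent)
aggregate_agent.subscribe(notify_agent)

for amount in (500, 1500, 2500, 50):
    filter_agent.receive({"type": "Order", "amount": amount})
```

After the loop, `alerts` holds a single `LargeOrderBurst` event with an amount of 4000: the two small orders are filtered out, and the two large ones are aggregated before the final agent is notified. Each agent knows only its own small job and its subscribers, which is the point of organizing the network this way.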