One analogy for Big Data analysis is to compare your data to a large lake. I don’t mean a little subdivision runoff pond, but rather a lake big enough for water skiing and other water sports. Measuring the volume of this lake down to the last gallon or ounce is virtually impossible.

Getting to the Bottom of Big Data

This lake is like many Big Data problems: with traditional tools, getting an accurate understanding of some data sets is virtually impossible, or at least not cost-effective. To tackle such problems, companies have begun turning to tools like Hadoop, Hive, Pig, Cassandra, and ZooKeeper.

The challenge with these new tools is that they become new sources of IT spend within the organization, developing a life of their own with dedicated hardware and personnel. The answers they produce are snapshots of a single point in time, and as time passes, those answers become less and less accurate.

Let’s go back to the lake analogy. To keep it simple, assume that no precipitation falls directly on the lake and no water evaporates from it, since these real-life effects don’t map to an IT paradigm. Now assume that you have built a big water counting machine (just as your company built out a Hadoop cluster). You feed all of the water in the lake through the machine, and it tells you the number of ounces of water in the lake… for that point in time.

The problem is that the volume constantly changes. Creeks, streams, and rivers flow into the lake, and perhaps one or two flow out. A minute after your machine gives you the answer, that answer is no longer 100% correct, and the more time passes after the count, the less accurate it becomes.

Understanding How to Keep Your Info Valuable

TIBCO knows this phenomenon well: the value of an answer decays with time. As a real-time data processing company, we know that the true value of information lies in getting it to the right person in time for that person to react.

If we put small water counting machines at each creek, stream, and river flowing into and out of the lake, we could measure all of the water entering or leaving it. At any time, we could combine this streaming information with the static result produced by our big water counting machine, giving us an accurate measurement of the lake no matter when we did the big count. Our count would always be accurate, and our measurement would be:

Current_answer = Historic_information_answer + new_information_answer

Historic_information_answer is the result from the big water counting machine, and new_information_answer is the net of all the water streaming into and out of the lake since that count (inflows minus outflows).
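To make the formula concrete, here is a minimal Python sketch, assuming a single batch snapshot plus running totals from the inflow and outflow meters. The class name `LakeVolume` and its methods are hypothetical and only illustrate the arithmetic; they are not part of any TIBCO or Hadoop API.

```python
from dataclasses import dataclass


@dataclass
class LakeVolume:
    """Hypothetical sketch: a one-time batch count kept current by streaming meters."""

    historic_answer: float  # Historic_information_answer: result of the one-time "big count"
    inflow: float = 0.0     # total measured at inflowing creeks since the big count
    outflow: float = 0.0    # total measured at outflowing streams since the big count

    def record_inflow(self, amount: float) -> None:
        self.inflow += amount

    def record_outflow(self, amount: float) -> None:
        self.outflow += amount

    @property
    def new_information_answer(self) -> float:
        # Net streaming contribution: what came in minus what went out
        return self.inflow - self.outflow

    @property
    def current_answer(self) -> float:
        # Current_answer = Historic_information_answer + new_information_answer
        return self.historic_answer + self.new_information_answer


lake = LakeVolume(historic_answer=1_000_000)
lake.record_inflow(250)
lake.record_outflow(100)
print(lake.current_answer)  # 1000150.0
```

The big count runs once; every later reading costs only an addition, which is what lets the expensive machine move on to another lake.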

This frees our big water counting machine to analyze a different lake. Similarly, in the corporate world, we can repurpose Big Data tools such as Hadoop clusters to solve new problems, and we never have to solve the same problem twice.

Perhaps the business needs to know the answer to the following question:

What are the top five products sold with XYZ widgets in the past 365 days when the order total is greater than $300 and the customer pays with cash?

This would be a great question for Hadoop to answer once. However, if the business needs the answer over a rolling 365-day window, rerunning the Hadoop job to refresh it is an inefficient use of cluster resources. A much more effective solution is to have Hadoop solve the problem once and then have smaller, more efficient engines monitor the point-of-sale (POS) data for this situation. By adding the streaming POS data to the answer Hadoop pre-calculated, you always have the correct answer to the question without wasting precious resources.
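The sketch below shows one way the "solve once, then stream" idea could apply to the rolling-window question. It is a minimal Python illustration under stated assumptions: the batch job is assumed to emit per-day counts of products sold alongside XYZ widgets, and the names `Order`, `matches`, `RollingTopProducts`, and the `"XYZ widget"` identifier are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date, timedelta

WINDOW_DAYS = 365
ANCHOR_PRODUCT = "XYZ widget"  # hypothetical product identifier


@dataclass
class Order:
    day: date
    products: list[str]
    total: float
    payment: str


def matches(order: Order) -> bool:
    # The business question's filter: contains an XYZ widget, total over $300, paid in cash
    return ANCHOR_PRODUCT in order.products and order.total > 300 and order.payment == "cash"


class RollingTopProducts:
    def __init__(self, batch_daily_counts: dict[date, Counter]):
        # Seeded once from the batch job's per-day co-sale counts (assumed output shape)
        self.daily: dict[date, Counter] = {d: Counter(c) for d, c in batch_daily_counts.items()}

    def on_order(self, order: Order) -> None:
        # Streaming update: only POS events that match the filter touch the state
        if matches(order):
            companions = [p for p in order.products if p != ANCHOR_PRODUCT]
            self.daily.setdefault(order.day, Counter()).update(companions)

    def top_five(self, today: date) -> list[tuple[str, int]]:
        # Keep only days in (today - 365, today]; older buckets have aged out
        cutoff = today - timedelta(days=WINDOW_DAYS)
        for d in [d for d in self.daily if d <= cutoff]:
            del self.daily[d]
        total = Counter()
        for counts in self.daily.values():
            total.update(counts)
        return total.most_common(5)
```

Keeping the counts in daily buckets, rather than as one grand total, is a design choice this sketch assumes: a rolling window needs to drop orders as they age past 365 days as well as add new ones, and per-day buckets make that subtraction cheap.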

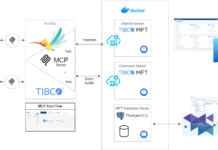

Unlike in our lake example, the smaller engines are not smaller Hadoop clusters. Instead, we use an enterprise service bus extended with complex event processing, a combination we sometimes call an event services bus. The event services bus can be programmed to monitor all of the data streams in the corporation for events that match the same filter the Hadoop cluster applied. When it finds a match, it combines the new streaming answer with the existing static answer from Hadoop.
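As a rough illustration of the filtering step, here is a hypothetical in-process event bus in Python where subscribers register a predicate and receive only matching events. This is a plain content-based publish/subscribe sketch, not a TIBCO API, and it covers only the filter-and-combine part of complex event processing; the topic name `"pos.orders"` and the batch total of 1200 are invented for the example.

```python
from collections import defaultdict
from typing import Any, Callable

Event = dict[str, Any]
Predicate = Callable[[Event], bool]
Handler = Callable[[Event], None]


class EventBus:
    """Hypothetical in-process stand-in for an event services bus."""

    def __init__(self) -> None:
        self._subscriptions: dict[str, list[tuple[Predicate, Handler]]] = defaultdict(list)

    def subscribe(self, topic: str, predicate: Predicate, handler: Handler) -> None:
        # Register interest in events on a topic that satisfy a filter
        self._subscriptions[topic].append((predicate, handler))

    def publish(self, topic: str, event: Event) -> None:
        # Deliver the event only to subscribers whose filter it satisfies
        for predicate, handler in self._subscriptions[topic]:
            if predicate(event):
                handler(event)


historic_matches = 1200     # hypothetical static answer from the batch job
matched: list[Event] = []   # streaming matches seen since the batch run

bus = EventBus()
bus.subscribe(
    "pos.orders",
    lambda e: "XYZ widget" in e["products"] and e["total"] > 300 and e["payment"] == "cash",
    matched.append,
)
bus.publish("pos.orders", {"products": ["XYZ widget", "case"], "total": 420.0, "payment": "cash"})
bus.publish("pos.orders", {"products": ["case"], "total": 500.0, "payment": "cash"})

print(historic_matches + len(matched))  # 1201
```

A production event services bus would add features such as time windows, correlation across streams, and delivery guarantees; the sketch only shows how one shared filter lets streaming results be added to the static answer.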

This architecture provides a more flexible approach to Big Data problems and makes the most of the investment in IT resources. The Big Data tools can be redeployed to solve many problems, while the company’s existing infrastructure keeps each result up to date.

Explore our Integration Maturity Model to better understand how to leverage your Big Data.