When customers are looking for ways of dealing with imperfect (substitute your own expletive here) data, there are five major factors that should be considered. You will find many competing claims about approaches and algorithms, but at the end of the day, my (completely unbiased) view about evaluation criteria is:

1. First and foremost, it’s all about accuracy. Here we could talk about specificity and sensitivity analysis and other statistical mumbo-jumbo, but for simplicity, let’s just focus on accuracy: measuring how close the system can come to reaching the same conclusions as a domain expert when faced with the same data.

2. It’s about scalability – dealing with big data. How easily can the system you select deal with increasingly large volumes of data and workloads?

3. For any organization doing business within and across national or cultural boundaries, being able to deal with any type of data in any language should be a key criterion. Systems are global and need to deal with data about many different types of entities, not just customer and product data, and do so in a way that is independent of language.

4. Data comes from so many different systems and sources that being able to easily configure requests to deal with whatever comes along is a must-have. So make sure you review the options provided for fine-tuning requests so that you can easily achieve the desired results.

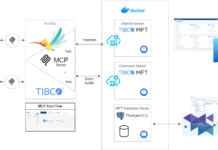

5. Finally, of course, look at how easily the system can be integrated with the existing and future applications, processes and tools that run your business. This involves looking at two main areas: What native language support is provided? How is that integration achieved? Then, make sure it will work effectively with your ESB, SOA, BPM and CEP products.
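To make the first criterion a little more concrete, here is a minimal sketch of how you might score a system against a domain expert on the same record pairs. Everything in it is an assumption for illustration: the function name `score_against_expert`, the `Agreement` type, and the sample labels are hypothetical, not part of any particular product. It reports plain accuracy alongside the sensitivity and specificity mentioned above.

```python
from dataclasses import dataclass


@dataclass
class Agreement:
    accuracy: float     # share of all calls where system and expert agree
    sensitivity: float  # share of the expert's matches the system also found
    specificity: float  # share of the expert's non-matches the system also rejected


def score_against_expert(system, expert):
    """Compare the system's match/no-match calls with a domain expert's calls."""
    if len(system) != len(expert):
        raise ValueError("both lists must cover the same record pairs")
    pairs = list(zip(system, expert))
    tp = sum(1 for s, e in pairs if s and e)          # both say "match"
    tn = sum(1 for s, e in pairs if not s and not e)  # both say "no match"
    fp = sum(1 for s, e in pairs if s and not e)      # system matched, expert did not
    fn = sum(1 for s, e in pairs if not s and e)      # system missed an expert match
    total = tp + tn + fp + fn
    return Agreement(
        accuracy=(tp + tn) / total if total else 0.0,
        sensitivity=tp / (tp + fn) if (tp + fn) else 0.0,
        specificity=tn / (tn + fp) if (tn + fp) else 0.0,
    )


# Hypothetical labels: True means "these two records are the same entity".
expert_calls = [True, True, True, False, False, False, False, True]
system_calls = [True, True, False, False, False, True, False, True]

result = score_against_expert(system_calls, expert_calls)
print(result)
```

In practice you would run this over a sample of record pairs that an expert has already reviewed, and compare candidate systems on the same sample.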