EbizQ is running a series of articles (first here and second here, curiously classified under “Social BPM”) on “dynamic BPM”, quoting luminaries such as Gartner’s Janelle Hill and Jim Sinur, and Forrester’s Clay Richardson.

In the simplest terms, [dynamic BPM] supports rapid “on the fly” process adjustments. “You can respond to emerging conditions and changing business needs, in some cases without any interruption to IT or without having IT get involved,” explains Jim Sinur…

Dynamic BPM is described as having various levels of complexity:

- rule/decision-based selection of processes / process paths – see (good old) decision management / rule technology

- dynamic configuration of parts of processes – usually a variation of the above, with decision rules determining what tasks to do and in which order

- goal-driven processes – selection of processes and process tasks is entirely based on some mechanism for addressing a route to a goal
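To make the first and simplest level concrete, here is a minimal sketch of rule-based selection of a process path. Everything in it is an assumption for illustration: the `Rule` type, the order fields and the task names are hypothetical and do not come from the EbizQ articles or from any particular BPM or rules product.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    """A hypothetical decision rule: a condition plus the process path it selects."""
    name: str
    condition: Callable[[dict], bool]
    process_path: list


# Illustrative rules only; first match wins, so order matters.
RULES = [
    Rule("high_value_order",
         lambda case: case["amount"] > 10_000,
         ["credit_check", "manager_approval", "ship"]),
    Rule("default",
         lambda case: True,
         ["ship"]),
]


def select_path(case: dict, rules: list) -> list:
    """Return the process path of the first rule whose condition holds for the case."""
    for rule in rules:
        if rule.condition(case):
            return rule.process_path
    raise LookupError("no rule matched this case")


if __name__ == "__main__":
    print(select_path({"amount": 50_000}, RULES))
    print(select_path({"amount": 25}, RULES))
```

The process paths themselves are still fixed in advance; only the choice between them is made late, at run time, which is why this level is really just decision management in front of ordinary orchestration.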

So let's look at some of the examples mentioned:

- railroads using RFID sensors to monitor passing traincars (a.k.a. “sense and respond”)

- complex algorithms to determine where to deploy troops (normally requiring “situation awareness”)

- government document tracking (a.k.a. “track and trace”)

- pharmaceutical and fragrance design process involving ad hoc partner participation (i.e. “social BPM”)

For sure, CEP (complex event processing) is at least a “major contributor”, and in reality probably the solution, for the “sense and respond” and “track and trace” application areas. It also helps with “situation awareness” in the real-time domain.
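As a rough illustration of what “sense and respond” means in event-processing terms, the sketch below watches a stream of sensor events and raises an alert when a pattern appears over a sliding window. It is not how any CEP engine is implemented; the event fields (`car_id`, `checkpoint`, `temp_c`), the window size and the temperature threshold are all assumptions made up for the example.

```python
from collections import defaultdict, deque

WINDOW = 3          # assumed: number of most recent readings kept per traincar
THRESHOLD_C = 90.0  # assumed: temperature above which a reading counts as "hot"


def detect_hot_cars(events, window=WINDOW, threshold=THRESHOLD_C):
    """Yield (car_id, checkpoint) whenever a car's last `window` readings all exceed the threshold."""
    recent = defaultdict(lambda: deque(maxlen=window))
    for event in events:
        readings = recent[event["car_id"]]
        readings.append(event["temp_c"])  # sense: record the newest reading
        if len(readings) == window and min(readings) > threshold:
            # respond: here we only emit an alert; a real system might start a process
            yield event["car_id"], event["checkpoint"]


if __name__ == "__main__":
    stream = [
        {"car_id": "A", "checkpoint": "cp1", "temp_c": 95.0},
        {"car_id": "B", "checkpoint": "cp1", "temp_c": 95.0},
        {"car_id": "A", "checkpoint": "cp2", "temp_c": 96.0},
        {"car_id": "B", "checkpoint": "cp2", "temp_c": 50.0},
        {"car_id": "A", "checkpoint": "cp3", "temp_c": 97.0},
        {"car_id": "B", "checkpoint": "cp3", "temp_c": 96.0},
    ]
    for car_id, checkpoint in detect_hot_cars(stream):
        print(f"inspect car {car_id} after {checkpoint}")
```

The point of the sketch is that the “process” (inspect the car) is triggered by a condition over several events rather than by a step in a predefined flow.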

What is fascinating is that the term “dynamic BPM” seems to really mean “any type of process that is not associated with simple, fixed task orchestration” – in other words, “non-BPM” technologies like CEP. One perspective is that a main driver for processes to be “dynamic” is the ability to respond to change – i.e. events. Indeed, many business processes now need to take account of larger quantities and higher rates of events that can be deemed important. Another perspective is that more sophisticated solutions are needed to solve or optimise more complex problems – think of classical planning and scheduling algorithms and predictive analytics, for example.

Probably the “dynamic BPM complexity levels” described in the article can be mapped to the following, based on increasing levels of event-handling capability:

- pre-defined, late-selected process paths: subprocesses are pre-defined

- no-predetermined-path processes, where rules or more complex algorithms determine what tasks need to take place – sets of tasks are allocated based on conditions and events: tasks are predefined

- goal-oriented and semantic processes, where goal states are reached by the dynamic selection of tasks based on current events and situation: tasks may be constructed on-the-fly, and processes and plans changed according to the situation and events.

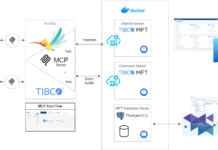
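The third level, goal-oriented processes, is the hardest to picture, so here is a minimal sketch of the idea using a classical planning approach: tasks declare what they require and what they achieve, and a breadth-first search finds a task sequence from the current situation to the goal. The task catalogue, the fact names and the `plan` function are hypothetical, and this is only one simple way to do it, not a description of any product mentioned in this post.

```python
from collections import deque
from typing import NamedTuple


class Task(NamedTuple):
    """A hypothetical task: facts it requires before running and facts it adds afterwards."""
    name: str
    requires: frozenset
    adds: frozenset


# Illustrative task catalogue; no ordering between tasks is defined anywhere.
TASKS = [
    Task("receive_order", frozenset(), frozenset({"order_received"})),
    Task("check_stock", frozenset({"order_received"}), frozenset({"stock_confirmed"})),
    Task("take_payment", frozenset({"order_received"}), frozenset({"paid"})),
    Task("ship", frozenset({"stock_confirmed", "paid"}), frozenset({"shipped"})),
]


def plan(state, goal, tasks):
    """Breadth-first search for a shortest task sequence that reaches the goal facts.

    Returns a list of task names, or None if the goal cannot be reached.
    """
    start, goal = frozenset(state), frozenset(goal)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        current, path = queue.popleft()
        if goal <= current:
            return path
        for task in tasks:
            if task.requires <= current:
                nxt = current | task.adds
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, path + [task.name]))
    return None


if __name__ == "__main__":
    # Plan from scratch, then replan after an event reports that payment already arrived.
    print(plan(set(), {"shipped"}, TASKS))
    print(plan({"order_received", "paid"}, {"shipped"}, TASKS))
```

When an event changes the situation, the plan is simply recomputed from the new state, which is the sense in which the process is changed according to the situation and events.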

There are other viewpoints as well – for example, adaptive case management (for non-automated, changeable processes), currently the subject of an OMG standardisation effort. This is related to goal-driven fulfillment technologies such as TIBCO AF and AC. Perhaps we are returning to – dare I say it – knowledge-based processes?

I leave the final words to a quote:

…according to Gartner Inc: “By 2013, dynamic BPM will be an imperative for companies seeking process efficiencies in increasingly chaotic environments.”