The Information Delivery Paradigm and Decision Latency: Real World vs. Business World

As consumers, we have grown to expect that information will be pushed to us in a timely, relevant, and contextual fashion. We learn about...

Decision Latency: Solving The Big Data Analytics Oversight

Big Data analytics are everywhere. According to a recent Forbes article, companies have now “purchased tools, hired solid analytic teams, and often tried to...

Introduction to Fast Data and TIBCO BusinessEvents

The industry is moving toward “digital business,” where billions of people and things interact with one another in real time across the globe. Hear...

TIBCO Podcast: Live Streaming Data—How to Analyze, Anticipate, and Act in Real Time

TIBCO's own Mark Palmer, SVP, integration and event processing, sits down with technology journalist Ellis Booker to discuss the evolution of live streaming data. Proponents of Big...

Fast Data. Continuous Answers. Real-Time Action.

Have you discovered a revolutionary in-memory technology that helps businesses query live streaming data? TIBCO Live Datamart applies predictive analytics for decision-making in real...

Creating Your First Live Datamart Hands-On

To close our video series marking the release of TIBCO Live Datamart 2.0, this final developer video is going to show you exactly what...

Build Your Own Interface with JavaScript APIs

In the modern world of ever-changing Fast Data, users need an information delivery platform that’s nimble and responsive enough to keep pace with the...

Get Your First Taste of Live Datamart 2.0

TIBCO recently launched Live Datamart 2.0, the industry's first data mart for Fast Data, which helps businesses capture value from streaming data and respond to...

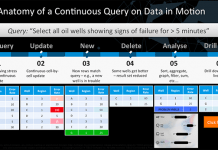

How to Query the Future: A Moment in the Life of a Continuous Query...

The foundation of Digital Business is Fast Data—data that’s in motion, emitted by connected products and services like streams of events coming from your...

TIBCO StreamBase + Hadoop + Impala = Fast Data Streaming Analytics

Apache Hadoop was built for processing complex computations on Big Data stores (that is, terabytes to petabytes) with a MapReduce distributed computation model that...